Tool Creation |Human Agents

Object Looking State

The object looking state defines what happens when an agent chooses to admire an object without touching it. Again, a population survey based on the location and demographics of the architectural project would give the most accurate simulation result within a specific context. However, in keeping with the ethos of this simulation tool, which has no specific context and is designed to apply across different typologies, a generic set of actions provides the most flexibility. To establish these generic actions, we can consider a passage from The Dynamics of Architectural Form, where Rudolf Arnheim states:

“These ‘proxemics’ normals influence also the choice of preferred distances between objects, e.g., the placement of furniture, and they are likely to affect the way people determine and evaluate the distances between buildings. […] We feel impelled to juggle the distances between objects until they look just right because we experience these distances as influencing forces of attraction and repulsion. […] In order for an object to be perceived appropriately, its field of forces must be respected by the viewer, who must stand at the proper distance from it. I would even venture to suggest that it is not only the bulk or height of the object that determines the range of the surrounding field of forces, but also the plainness or richness of its appearance. A very plain façade can be viewed from nearby without offense, whereas one rich in volumes and articulation has more expansive power and thereby asks the viewer to step farther back so that he may assume his proper position, prescribed by the reach of the building’s visual dynamics.”[12]

Here, Arnheim takes the proxemics described by Edward T. Hall (which we have also drawn on to establish the personal spaces of our agents) and applies them to the distances between objects as well as the distances between people. While Arnheim is specifically referring to the distances between pieces of furniture in this case, by shifting the point of reference it can be argued that these distances can also describe the preferred viewing distances of agents admiring an object. These viewing distances can then be influenced by factors such as the size of the object as well as “the plainness or richness of its appearance.”
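As a rough illustration of how size and richness could feed into a viewing distance, the sketch below scales a base distance by the object's size and pushes it further out as the appearance becomes richer. The function name, the linear form, and the parameters `base_factor` and `richness_gain` are all assumptions made for this example; neither Arnheim nor the simulation tool prescribes a specific formula.

```python
def preferred_viewing_distance(object_size, richness,
                               base_factor=1.5, richness_gain=1.0):
    """Hypothetical rule: distance grows with object size and visual richness.

    object_size   -- largest dimension of the object, in any length unit
    richness      -- 0.0 (very plain) to 1.0 (very rich); values are clamped
    base_factor   -- assumed multiple of the size at which a plain object is viewed
    richness_gain -- assumed extra push-back for a fully rich object
    """
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    richness = min(max(richness, 0.0), 1.0)  # clamp to [0, 1]
    return object_size * base_factor * (1.0 + richness_gain * richness)


# A plain and an ornate object of the same size (200 units).
plain = preferred_viewing_distance(200.0, 0.0)   # 300.0
ornate = preferred_viewing_distance(200.0, 1.0)  # 600.0
```

With these assumed defaults, the ornate object asks the viewer to stand twice as far back as the plain one, which mirrors the direction of Arnheim's argument without claiming any particular magnitude.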

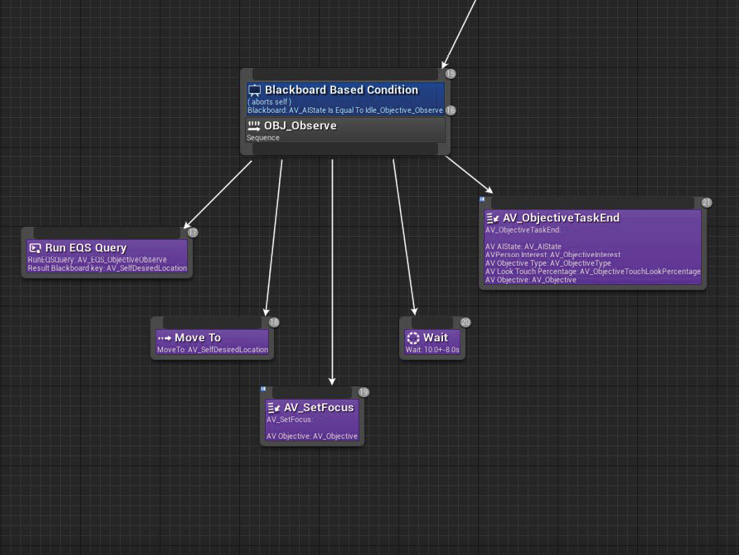

We can then recreate this description by establishing a series of movements within the behavior tree (Fig. 3.3.18). Using the EQS, we first determine a random location around the object (Fig. 3.3.19). We then tell the agent to move there, turn towards the object, and admire it for a randomized length of time. After this, the agent picks a different location around the object and repeats the process. This gives the following steps:

Behavior Tree Object-Looking state tasks. Screen-captured by Author.

1. Find a location around the object with the EQS.

2. Move to the location.

3. Turn towards the object.

4. Loop the observing animation for a randomized time interval.

5. Repeat from step 1, or set AgentState to something else (such as ObjectInteract or AgentDefault).
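The steps above can be sketched outside the engine as a small loop. This is a minimal, engine-independent illustration and not the behavior tree itself: `pick_viewing_point` is a hypothetical stand-in for the EQS query, movement is reduced to setting the agent's position, and the ring radii, timing range, and the probabilities `p_interact` and `p_bored` are assumed values chosen only for the example. The state name `ObjectLooking` is likewise a placeholder; `ObjectInteract` and `AgentDefault` follow the names used in step 5.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Agent:
    x: float
    y: float
    yaw_deg: float = 0.0
    state: str = "ObjectLooking"  # placeholder name for this state


def pick_viewing_point(obj_xy, min_r, max_r, rng):
    """Stand-in for the EQS query: a random point on a ring around the object."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    radius = rng.uniform(min_r, max_r)
    return (obj_xy[0] + radius * math.cos(angle),
            obj_xy[1] + radius * math.sin(angle))


def yaw_towards(from_xy, to_xy):
    """Heading in degrees that faces to_xy when standing at from_xy."""
    return math.degrees(math.atan2(to_xy[1] - from_xy[1],
                                   to_xy[0] - from_xy[0]))


def run_looking_state(agent, obj_xy, rng, min_r=150.0, max_r=400.0,
                      look_time=(2.0, 6.0), p_interact=0.2, p_bored=0.2,
                      max_cycles=10):
    """Run steps 1-5 until the agent leaves the state.

    Returns the total simulated seconds spent observing.
    """
    observed = 0.0
    for _ in range(max_cycles):
        target = pick_viewing_point(obj_xy, min_r, max_r, rng)  # step 1
        agent.x, agent.y = target          # step 2 (no pathfinding here)
        agent.yaw_deg = yaw_towards(target, obj_xy)             # step 3
        observed += rng.uniform(*look_time)                     # step 4
        roll = rng.random()                                     # step 5
        if roll < p_interact:
            agent.state = "ObjectInteract"
            break
        if roll < p_interact + p_bored:
            agent.state = "AgentDefault"
            break
    else:
        # Assumed fallback: lose interest after max_cycles viewpoints.
        agent.state = "AgentDefault"
    return observed


# Example run with a seeded generator so the walk is repeatable.
rng = random.Random(0)
agent = Agent(0.0, 0.0)
seconds = run_looking_state(agent, (1000.0, 500.0), rng)
```

Keeping the exit decision in step 5 as a single random roll is a design shortcut for the sketch; in the behavior tree the same choice could be driven by whatever conditions set AgentState.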

This series of actions gives the illusion of agents trying to “juggle the distances between objects until they look just right” (Fig. 3.3.20). Throughout this process, the agent may also decide to interact with the object or become bored with it. While this is by no means a perfect representation, it provides a relatively simple and generic approach to portraying this action at this stage of tool creation.