Tool Creation |Human Agents

Decision Logic

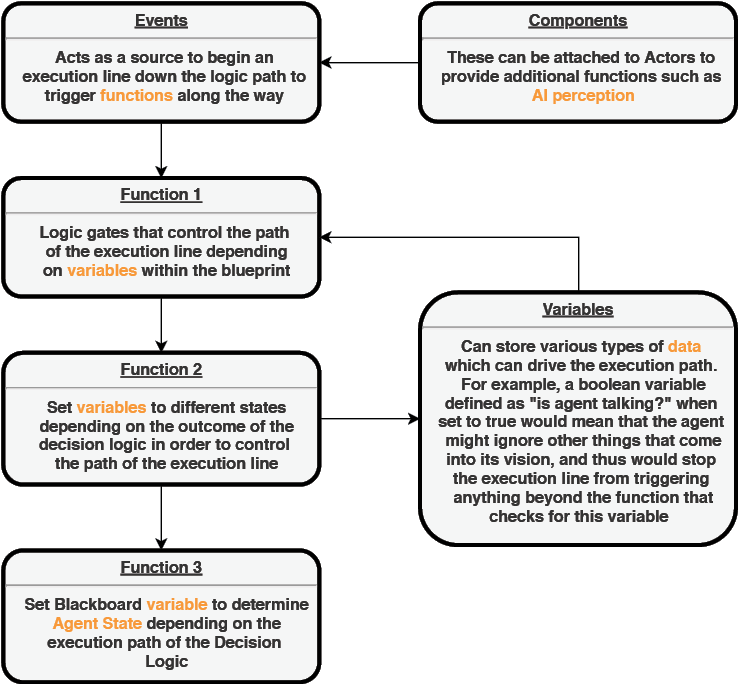

The next level of these systems is the decision logic, which allows our agents to choose an action when they see an object in the environment. This logic can be built within the AI Controller class, whose EventGraph supports a form of visual scripting made up of several elements, such as:[4]

“Events are nodes that are called from gameplay code to begin execution of an individual network within the EventGraph.”[5]

Functions are node graphs within a Blueprint that can be called to perform a specific task.[6] They can be used throughout the EventGraph to change variables and divert the flow of execution.

“Variables are properties that hold a value or reference an Object or Actor in the world.”[7] They can be used to store or reference various types of data within the Blueprint.

Components are sub-objects that can be attached to actors to give them additional functionality.[8]
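
Blueprints are edited visually rather than written as text, but the four elements above map loosely onto familiar programming constructs. The following is a minimal Python analogy, not UE4 code: the classes `PerceptionComponent` and `AgentController`, the `on_see` event, the `"Notice"` state, and the sight radius are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PerceptionComponent:
    """Component: a sub-object attached to an owner to add functionality (sight)."""
    sight_radius: float = 1500.0  # hypothetical radius, in Unreal units

    def can_see(self, distance: float) -> bool:
        return distance <= self.sight_radius


@dataclass
class AgentController:
    # Variables: properties that hold a value or reference another object.
    name: str
    agent_state: str = "Idle"
    target: Optional[str] = None
    # Component: attached sub-object that gives the controller perception.
    perception: PerceptionComponent = field(default_factory=PerceptionComponent)

    # Function: a reusable graph that changes variables when it is called.
    def set_agent_state(self, new_state: str, target: Optional[str] = None) -> None:
        self.agent_state = new_state
        self.target = target

    # Event: an entry point called from gameplay code to start an execution line.
    def on_see(self, seen: str, distance: float) -> None:
        if self.perception.can_see(distance):
            self.set_agent_state("Notice", seen)


agent = AgentController("Visitor")
agent.on_see("Sculpture", distance=800.0)
print(agent.agent_state, agent.target)  # prints: Notice Sculpture
```

The event only starts the execution line; the actual change of state happens inside the function it calls, which mirrors how an Event Node hands execution to the rest of the network.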

There are many more types of elements within these EventGraphs, but these four make up most of this decision logic system. They can be connected to form a decision network, which lets the agents arrive at their choices step by step. (Fig. 3.3.5) While this may look complicated, it is a rather straightforward process:

The first step begins with the Event Node. Event Nodes begin the execution of nodes down an individual network of functions, and they can be triggered in many ways, including, but not limited to, every frame, at a set time interval, when a key is pressed, and, in the context of AI perception, whenever the agent sees something.[9]
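
To make the idea of trigger types concrete, here is a small Python sketch of an event dispatcher, not the UE4 API. The event names `"Tick"`, `"Timer"`, `"KeyPressed"`, and `"PerceptionUpdated"` are hypothetical labels, loosely analogous to Event Tick, timers, input events, and the AI Perception component's On Target Perception Updated event; the `EventGraph` class itself is an illustrative assumption.

```python
from collections import defaultdict
from typing import Callable


class EventGraph:
    """Toy stand-in for an EventGraph: each named event starts the networks bound to it."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[..., None]]] = defaultdict(list)
        self._timers: list[list] = []  # each entry: [interval, time_left, event_name]

    def bind(self, event_name: str, handler: Callable[..., None]) -> None:
        self._handlers[event_name].append(handler)

    def fire(self, event_name: str, *args) -> None:
        # An event begins execution down every network bound to it.
        for handler in self._handlers[event_name]:
            handler(*args)

    def set_timer(self, interval: float, event_name: str) -> None:
        self._timers.append([interval, interval, event_name])

    def tick(self, delta_seconds: float) -> None:
        # "Every frame" trigger.
        self.fire("Tick", delta_seconds)
        # "Set time interval" trigger: fire once each time the interval elapses.
        for timer in self._timers:
            timer[1] -= delta_seconds
            while timer[1] <= 0:
                timer[1] += timer[0]
                self.fire(timer[2])


log = []
graph = EventGraph()
graph.bind("Tick", lambda dt: log.append("tick"))
graph.bind("Timer", lambda: log.append("timer"))
graph.bind("KeyPressed", lambda key: log.append(f"key {key}"))
graph.bind("PerceptionUpdated", lambda seen: log.append(f"saw {seen}"))
graph.set_timer(1.0, "Timer")
for _ in range(4):  # simulate four frames of 0.5 s each
    graph.tick(0.5)
graph.fire("KeyPressed", "E")                 # key-press trigger
graph.fire("PerceptionUpdated", "Sculpture")  # "agent sees something" trigger
print(log)
# ['tick', 'tick', 'timer', 'tick', 'tick', 'timer', 'key E', 'saw Sculpture']
```

Only the perception trigger matters for the decision logic that follows; the others are included to show that every trigger type funnels into the same mechanism.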

Once the agent sees something, the AI perception Event Node begins a line of execution down the network, where we can use a number of Boolean-driven Branch nodes to divert the execution line. (Fig. 3.3.6) The Booleans that drive these Branch nodes can be defined in various ways, but for this simulation we can use the concept of percentages defined in Chapter 2.3. With this, we can generate a random number between 0 and 1, where 1 is 100% yes, 0 is 0% yes (100% no), 0.56 is 56% yes (44% no), and so on. (Fig. 3.3.7)

We can then perform a series of checks throughout the logic network to determine the agent's final action. Is the agent seeing an object? If yes, is the agent currently doing something? If no, is the agent interested in this object? If yes, does it want to observe and admire it or interact with it? If interact, set AgentState to Interact. (Fig. 3.3.8)

However, if the agent is currently busy or is not interested in the object, the execution line moves down and asks whether the agent is seeing another agent. If yes, is the agent currently doing something? And so on. These checks continue for every type of entity the agent comes across. (Fig. 3.3.9)
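
The chain of percentage-driven branches can be sketched in Python as follows. This assumes each Branch evaluates to true when a uniform random roll in [0, 1) falls below the relevant percentage, which is one common way to turn a percentage into a weighted yes/no (UE4's Random Bool with Weight node behaves similarly). The attribute names, the busy test, and the `"Observe"` and `"Socialize"` states are hypothetical; only `AgentState` and `Interact` come from the text.

```python
import random
from dataclasses import dataclass


def weighted_bool(probability: float, rng: random.Random) -> bool:
    """Return True with the given probability (0.0 = never, 1.0 = always, 0.56 = 56%)."""
    # rng.random() is uniform on [0.0, 1.0), so it falls below p with probability p.
    return rng.random() < probability


@dataclass
class Agent:
    # Hypothetical percentages in the style of Chapter 2.3, each in [0, 1].
    interest_in_objects: float
    interest_in_agents: float
    interact_vs_observe: float  # chance of interacting rather than observing
    agent_state: str = "Idle"   # stands in for AgentState

    def is_busy(self) -> bool:
        # Assumption: any state other than Idle counts as "doing something".
        return self.agent_state != "Idle"


def decide(agent: Agent, seen_kinds: set[str], rng: random.Random) -> str:
    """Walk the branch chain described in the text and return the new state."""
    # Is the agent seeing an object? Is it busy? Is it interested?
    if "object" in seen_kinds and not agent.is_busy():
        if weighted_bool(agent.interest_in_objects, rng):
            # Observe and admire, or interact?
            agent.agent_state = (
                "Interact" if weighted_bool(agent.interact_vs_observe, rng) else "Observe"
            )
            return agent.agent_state
    # Busy or not interested: move down and check for another agent.
    if "agent" in seen_kinds and not agent.is_busy():
        if weighted_bool(agent.interest_in_agents, rng):
            agent.agent_state = "Socialize"
            return agent.agent_state
    # Further entity types would follow the same pattern.
    return agent.agent_state


rng = random.Random(42)
visitor = Agent(interest_in_objects=0.56, interest_in_agents=0.3, interact_vs_observe=0.5)
print(decide(visitor, {"object", "agent"}, rng))
```

Passing a seeded `random.Random` keeps runs reproducible, which is convenient when comparing how agents with different percentages behave.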

Simplified visual scripting process within UE4

Illustrated by Author.

Naturally, this is an oversimplification, as the various nuances of UE4 require additional steps, but these are the essential steps needed to create these behaviors and give the agents the illusion of choice. Depending on the path the execution takes, different functions within the Blueprint are triggered, which allows us to define the agent's actions based on these paths.
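
The final hand-off from an execution path to an action can be pictured as a lookup from the chosen state to a function, loosely similar to a Switch node on an enum variable in Blueprints. In this Python sketch the functions `interact`, `observe`, and `idle`, the `ACTIONS` table, and the fallback to idling are hypothetical choices for illustration.

```python
from typing import Callable


def interact(target: str) -> str:
    return f"walks to {target} and interacts with it"


def observe(target: str) -> str:
    return f"stops to admire {target}"


def idle(_target: str) -> str:
    return "continues wandering"


# Hypothetical mapping from the state chosen by the branch chain to the
# function that carries out the corresponding action.
ACTIONS: dict[str, Callable[[str], str]] = {
    "Interact": interact,
    "Observe": observe,
    "Idle": idle,
}


def perform(agent_state: str, target: str) -> str:
    # Unknown states fall back to idling rather than raising an error.
    return ACTIONS.get(agent_state, idle)(target)


print(perform("Interact", "the fountain"))  # walks to the fountain and interacts with it
```

Keeping the decision (which state) separate from the action (what that state does) means new behaviors can be added by registering another function without touching the branch chain.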