
Technical Research | Abstracting the Human Systems


to achieve on top of their instinctive desires; thus, one would need to use a number of functions to calculate the final velocity vector that determines their movement.

To define action selection within a crowd simulation, one must first identify the individual goals and desires of the people who make up the crowd. These goals and desires will change based on the location and type of space that the person is occupying, but at this stage of prototyping, it is enough to define some generic desires as a starting point for approximating human behavior. From here, other desires can be added as conditions change and require them. Desires can be separated into conscious goals and subconscious desires, which can in turn be calculated separately and added to the individual acceleration vectors of the agents. At the conscious level, people might have goals such as reaching a destination, doing a task, meeting someone, or any combination of these; at the subconscious level, they may desire to do their task without walking into objects such as walls, furniture, or other people.
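As a minimal sketch of how separately calculated desires might be combined, the snippet below sums two lists of 2D force vectors into one acceleration and uses it to update an agent's velocity and position. The function names `limit` and `step`, the `max_speed` value of 1.4, and the time step `dt` are assumptions made for illustration; they are not taken from the simulation described here.

```python
import math

def limit(vec, max_mag):
    """Scale a 2D vector down so its magnitude does not exceed max_mag."""
    mag = math.hypot(vec[0], vec[1])
    if mag > max_mag and mag > 0:
        return (vec[0] / mag * max_mag, vec[1] / mag * max_mag)
    return vec

def step(position, velocity, conscious_forces, subconscious_forces,
         max_speed=1.4, dt=1.0):
    """Advance one agent by one time step.

    Each list holds 2D force vectors that were calculated separately.
    They are summed into a single acceleration, which updates the
    velocity (capped at max_speed, an assumed value) and the position.
    """
    forces = list(conscious_forces) + list(subconscious_forces)
    ax = sum(f[0] for f in forces)
    ay = sum(f[1] for f in forces)
    velocity = limit((velocity[0] + ax * dt, velocity[1] + ay * dt), max_speed)
    position = (position[0] + velocity[0] * dt, position[1] + velocity[1] * dt)
    return position, velocity

# Hypothetical usage: one goal-seeking force and one avoidance force.
pos, vel = step((0.0, 0.0), (0.0, 0.0),
                conscious_forces=[(1.0, 0.0)],
                subconscious_forces=[(0.0, 0.5)])
```

Keeping the two lists separate until the final sum makes it straightforward to add or reweight individual desires later without touching the movement update.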

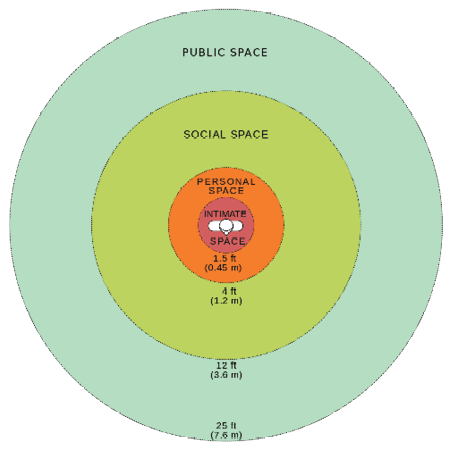

While action selection is mainly responsible for this subconscious level of obstacle interaction, both levels of desire can be influenced by factors such as stress, energy, and comfort. For example, people might consciously choose to sit down if their energy level is low, but they might also subconsciously take a longer route there to avoid higher-traffic areas. The correlation between these attributes and conscious goals is quite clear, but it becomes less obvious when dealing with subconscious desires. To better understand the influence of these attributes, one can investigate proxemics, the study of human spatial requirements and their effects on behavior and social interactions. Edward T. Hall coined this term in 1963 and developed it in his 1966 book The Hidden Dimension, in which he explores social and personal spaces and man’s perception of them. Here, he divides space into four distinct zones, defining the interpersonal distances of man.[20] (Fig. 2.3.22) Examining these four zones, it can be inferred that the two inner zones play a greater role in the subconscious desires of object and people avoidance, whereas the two outer zones play a greater role in the conscious desires of tasks and goals. The space within the two inner zones is called personal space, which can be described as the space around a person that they regard as theirs. Entering such a space often indicates familiarity; it is therefore logical to assume that most individuals prefer to control these spaces, and that in an unfamiliar, crowded public realm, preserving personal space becomes an important desire.
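To illustrate how the four zones could be represented in code, the sketch below classifies a distance between two people into one of Hall's zones. The text above gives no figures, so the outer radii are assumed here from commonly cited metric approximations. The names `ZONES`, `classify_distance`, and `in_personal_space` are hypothetical.

```python
# Approximate outer radii in metres, as commonly cited for Hall's zones.
# These values are assumptions for illustration only.
ZONES = [
    ("intimate", 0.45),
    ("personal", 1.2),
    ("social", 3.6),
    ("public", 7.6),
]

def classify_distance(distance_m):
    """Return the innermost zone containing the distance, or None if beyond all zones."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for name, outer_radius in ZONES:
        if distance_m <= outer_radius:
            return name
    return None

def in_personal_space(distance_m):
    """True when the distance falls within the two inner zones."""
    return classify_distance(distance_m) in ("intimate", "personal")
```

Under these assumed radii, a neighbor 1.0 m away falls inside personal space, while one 2.0 m away sits in the social zone and would not trigger subconscious avoidance.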

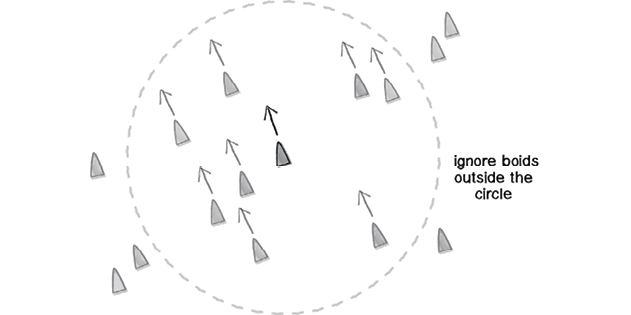

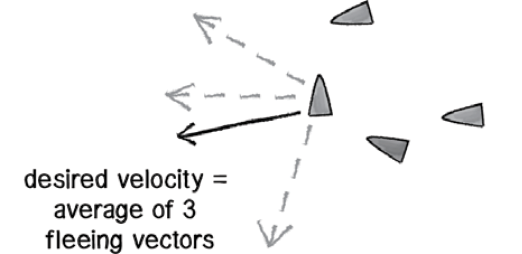

This notion of personal space is a useful consideration not only for how it influences human behavior but also for how easily it can be described by scalar variables, as distances around the person. With this deduction, one can use these defined personal distances as a radius within which the agents interact in the simulation. Translating this into machine logic, it can be coded that when another agent or object enters the agent’s personal space, a steering force will be calculated in the opposite direction to steer them away, or towards the other

20 Edward T. Hall, The Hidden Dimension (Garden City, NY: Doubleday, 1966), 107-122.

Edward T. Hall’s interpersonal distances of man

By WebHamster, “File:Personal Space.svg,” Diagram Representation of Personal Space Limits, According to Edward T. Hall’s Interpersonal Distances of Man, March 8, 2009, Wikimedia Commons, accessed December 25, 2019, https://commons.wikimedia.org/wiki/File:Personal_Space.svg.

We can use these defined personal spaces to determine the area around the agent in which they will be affected

From Shiffman, “Chapter 6. Autonomous Agents.”

Utilizing Fleeing behaviour to avoid other agents that may have entered the Agent’s personal space

From Shiffman, “Chapter 6. Autonomous Agents.”
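The fleeing behaviour in the caption above could be sketched as follows: a steering force is produced only when another agent lies inside the personal-space radius, and it points directly away from that agent. This is a simplified stand-in, not the formulation in Shiffman's chapter. The function name `flee_force`, the default radius of 1.2, the `max_force` cap, and the linear scaling with proximity are all assumptions.

```python
import math

def flee_force(agent_pos, other_pos, personal_radius=1.2, max_force=1.0):
    """Return a 2D steering force pushing the agent away from other_pos.

    The force is zero when the other agent is at or beyond
    personal_radius, and grows linearly (an assumed falloff) as the
    other agent gets closer.
    """
    dx = agent_pos[0] - other_pos[0]
    dy = agent_pos[1] - other_pos[1]
    dist = math.hypot(dx, dy)
    # Outside personal space, or exactly overlapping (direction undefined).
    if dist >= personal_radius or dist == 0:
        return (0.0, 0.0)
    strength = max_force * (1 - dist / personal_radius)
    return (dx / dist * strength, dy / dist * strength)

# Hypothetical usage: a neighbor 0.6 units to the right pushes the agent left.
force = flee_force((0.0, 0.0), (0.6, 0.0))
```

Scaling the force by proximity avoids an abrupt jump at the edge of the radius; a constant-magnitude force would be an equally valid choice under different assumptions.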