Next Steps | Photorealism

Photogrammetry recreates an object by capturing multiple images of it from various angles.

From Joseph Azzam, “Everything You Need to Know about Photogrammetry I Hope,” January 10, 2017, Gamasutra, accessed January 1, 2020, https://www.gamasutra.com/blogs/JosephAzzam/20170110/288899/Everything_You_Need_to_Know_about_Photogrammetry_I_hope.php.

Photorealism

The next set of improvements to explore for this pipeline is photorealistic rendering output. With hardware becoming increasingly powerful and software increasingly sophisticated, photorealistic rendering methods are becoming more accessible. While the default visuals of UE4 provided adequate results, they are only the beginning of what this game engine is capable of. At a minimum, this step would require investigating various approaches to material creation, but to fully utilize the software, we would also need to investigate more advanced photorealism methods such as photogrammetry and real-time ray tracing. In doing so, we can further solidify this framework as an efficient way to both analyze and visualize a space within the same workflow.

Photogrammetry

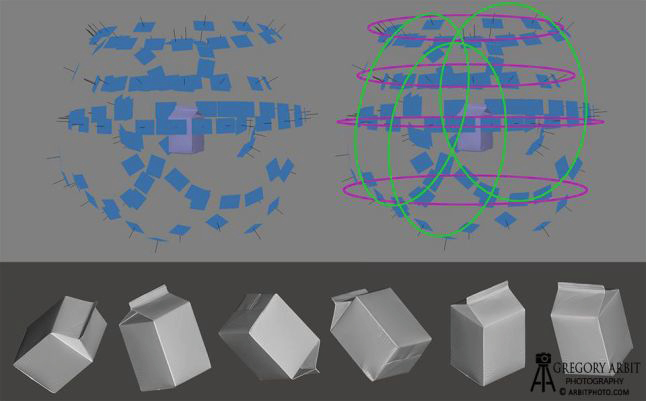

Photogrammetry can be defined as “the art, science and technology of obtaining reliable information about physical objects and the environment through the process of recording, measuring and interpreting photographic images and patterns of electromagnetic radiant imagery and other phenomena.”[1] Simply put, it allows us to create exceptionally detailed 3D models by taking multiple images of the subject from different angles (Fig. 5.2.1). While the concept is not new, commercial photogrammetry software has only recently become easily accessible. Using software such as Agisoft Photoscan, Reality Capture, and Pix4d, we can create realistic assets with relative ease, and these assets can in turn be used to create visualizations that are almost visually on par with traditional ray-traced methods at a fraction of the rendering time.[2] (Fig. 5.2.2-3) These visualizations are approaching a level of photorealism that is hard to distinguish from real life, so the implications for architectural visualization are significant and worth investigating.
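
To make the idea of recovering 3D shape from several views more concrete, the following is a minimal sketch of one geometric building block, triangulation: if the same feature is seen from two known camera positions, the two viewing rays should (nearly) meet at the feature's 3D location. This is an illustration under simplifying assumptions, not a description of how any of the packages named above work internally; the function name `triangulate_midpoint` and the example coordinates are hypothetical, and real pipelines also have to match features across images and estimate the camera poses.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two viewing rays (hypothetical helper).

    c1, c2: camera centers; d1, d2: ray directions toward the same feature.
    Returns the midpoint of the shortest segment joining the two rays,
    or None if the rays are parallel and no unique estimate exists.
    """
    w = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:  # parallel rays
        return None
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = tuple(o + s * v for o, v in zip(c1, d1))
    p2 = tuple(o + t * v for o, v in zip(c2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


# Two cameras one unit to either side of the origin, both looking at the
# same feature located five units in front of them (assumed example values).
point = triangulate_midpoint((-1.0, 0.0, 0.0), (1.0, 0.0, 5.0),
                             (1.0, 0.0, 0.0), (-1.0, 0.0, 5.0))
print(point)
```

Repeating this for many matched features across many photographs yields a cloud of 3D points, which is roughly the raw material from which a detailed model can be built.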

Real-time ray tracing

As already mentioned in Chapter 1.3: Advent and Progression of the Game Engine, ray tracing is a rendering method in which the paths of simulated “light rays” bouncing throughout the environment are traced back to the camera.[3] This technique is what allows traditional architectural rendering methods to outperform game engines in photorealistic representation: they have the luxury of simulating lighting based on real-world physics instead of faking it with textures. The benefits of real-time ray tracing for architectural visualization are therefore largely self-explanatory.
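
As a rough illustration of the principle, the sketch below follows a single ray from the camera into a scene containing one sphere and one point light, then computes how bright the hit point appears from its orientation toward the light. It is a deliberately minimal example under stated assumptions (one sphere, simple diffuse shading, no shadows or bounces), and the function names `intersect_sphere` and `shade` are hypothetical; it is not how UE4 or any offline renderer is implemented.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def intersect_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c  # discriminant of the quadratic (a == 1)
    if disc < 0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def shade(origin, direction, center, radius, light_pos):
    """Brightness in [0, 1] seen along one camera ray (diffuse, no shadows)."""
    direction = normalize(direction)
    t = intersect_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0  # background
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = normalize(tuple(h - c for h, c in zip(hit, center)))
    to_light = normalize(tuple(l - h for l, h in zip(light_pos, hit)))
    # Surfaces facing the light are bright; surfaces facing away are dark.
    return max(0.0, dot(normal, to_light))


# Camera at the origin looking down -z at a unit sphere five units away,
# lit from the camera position (assumed example values).
print(shade((0, 0, 0), (0, 0, -1), (0, 0, -5), 1.0, (0, 0, 0)))
```

A full renderer repeats this for every pixel and follows each ray through many bounces, which is why the technique has traditionally been too slow for interactive use.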

Real-time ray tracing allows us to output renderings much faster than traditional methods, and as such has the potential to further blur the line between virtual interactive visualizations and reality. While this technology is still relatively new, more and more software is beginning to support it, including UE4 (where the feature is still in beta), with demos already showing its capabilities.[4] (Fig. 5.2.4) This, along with Nvidia’s recent introduction of ray-tracing-specific RTX graphics cards, shows that real-time ray tracing is right around the corner and will only become more powerful as technology improves.